03 Nov 2016 [notes by Johannes P. and Lukas H., expanded by C. Henkel]

A sample of Berenice Abbott’s scientific photographs is in the post for lecture two. These black-and-white pictures were made at the end of the 1950s, when she worked at MIT. They show experiments in an impressive way and were used in high-school textbooks. The picture of double-slit interference with water waves is reprinted in the book by Gerry and Bruno, but without crediting B. Abbott…

A contemporary example of the ongoing discussion on quantum mechanics is provided by the International School of Physics “Enrico Fermi”, which held a course on the foundations of quantum theory in July 2016.

On the topic of interference with C60 (fullerene) molecules: another paper by M. Arndt’s group, discussing the influence of temperature on such systems, was sketched. The fullerenes were heated so that they emit infrared photons at shorter wavelengths. With increasing temperature, the number of ionized (and therefore detected) particles first went up, while the interference pattern was preserved. Even higher temperatures lead to thermal ionisation and fragmentation, reducing the signal. The key observation is that at intermediate temperatures, the typical wavelengths emitted by the fullerene molecules are not short enough to distinguish between the two paths, so the interference remains visible. At higher temperatures, the emission spectrum contains shorter wavelengths, and which-path (“welcher Weg”) information becomes available, so that the interference gets washed out.
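
The wavelength argument can be made semi-quantitative with a rough sketch. It assumes that the hot molecule emits like an ideal black body (which a fullerene is not) and uses Wien's displacement law for the peak wavelength. The temperatures and the path separation below are hypothetical values chosen for illustration; they are not taken from the paper.

```python
# Wien's displacement law for an ideal black body (an approximation here:
# a hot fullerene is not a perfect black-body emitter).
WIEN_B = 2.897771955e-3  # Wien displacement constant in metre * kelvin

def peak_wavelength(temperature_kelvin):
    """Wavelength (in metres) at which black-body emission peaks."""
    return WIEN_B / temperature_kelvin

def paths_resolvable(temperature_kelvin, path_separation):
    """Rough criterion: an emitted photon can reveal the path only if its
    wavelength is not longer than the separation of the two paths."""
    return peak_wavelength(temperature_kelvin) <= path_separation

# Hypothetical path separation, chosen only for illustration.
ASSUMED_PATH_SEPARATION = 1.0e-6  # metres

for T in (1000, 2000, 3000, 4000):  # hypothetical temperatures in kelvin
    lam = peak_wavelength(T)
    print(f"T = {T} K: peak wavelength = {lam * 1e6:.2f} um, "
          f"paths resolvable: {paths_resolvable(T, ASSUMED_PATH_SEPARATION)}")
```

The sharp yes/no criterion is a simplification: in reality the loss of interference contrast sets in gradually as more and shorter-wavelength photons are emitted.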

Discussion of Papers by Schrödinger (1935) and Zurek (2003)

Two papers were given to the students to read: Erwin Schrödinger’s “Die gegenwärtige Situation in der Quantenmechanik” (The Present Situation in Quantum Mechanics, Naturwissenschaften 1935) and Wojciech H. Zurek’s “Decoherence and the transition from quantum to classical — revisited” (Physics Today 1991 and arXiv 2003).

See the literature list and especially the pages on Schrödinger’s paper and on decoherence for further information.

Schrödinger (1935)

In the first paragraph of his paper, Schrödinger points out the relation between reality and our model of it. The model is an abstract construction that focuses on the important aspects and, in doing so, ignores particularities of the system. It simplifies reality. This approximation is set arbitrarily by humans, as he states: “Without arbitrariness there is no model.”

This simplification is made in order to be able to calculate exactly, and herein lies a difficulty of quantum mechanics: for given initial conditions, the model contains less information about the (final) state of a system than, e.g., classical mechanics does.

In a later section, the famous Schrödinger cat experiment is described. A weakly radioactive material, which emits on average one radiation particle per hour, is used as the quantum system. The cat is placed in a sealed box and is killed by a mechanism as soon as the radioactive decay happens and is detected. This simple setup transfers the uncertainty of the quantum world up to the macroscopic level, where the cat clearly belongs. The wave function becomes a superposition of the states “alive” and “dead”.
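
A generic form of this superposition can be written as follows. The amplitudes α and β and the state labels are symbols assumed here for illustration; they are not taken from the lecture.

```latex
|\Psi\rangle = \alpha\,|\text{no decay}\rangle\,|\text{alive}\rangle
             + \beta\,|\text{decay}\rangle\,|\text{dead}\rangle,
\qquad |\alpha|^2 + |\beta|^2 = 1
```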

If you now check after one hour or so whether or not the cat is still alive, you get only one definite outcome. In the words of the Copenhagen interpretation: the wave function collapses into one of the states composing it, “dead” or “alive” (but not both). From a single measurement, you cannot prove interference. To do so, the experiment must be modified so as to measure a quantity that is sensitive to the superposition. It then has to be repeated many times with identical initial conditions. The many outcomes taken together produce an interference pattern (bright and dark fringes) that can be interpreted as a wave phenomenon.
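
How a pattern emerges only from many repetitions can be illustrated with a small simulation. This is a minimal sketch, assuming an idealised two-path experiment with a cos² fringe profile; the fringe spacing, the detection window, and the number of runs are arbitrary choices, not values from the lecture.

```python
import math
import random

def fringe_probability(x, fringe_spacing=1.0):
    """Relative detection probability |psi_1 + psi_2|^2 for two equal-amplitude
    waves, which gives cos^2 fringes (envelope ignored for simplicity)."""
    return math.cos(math.pi * x / fringe_spacing) ** 2

def detect_one(rng, x_min=-2.0, x_max=2.0):
    """One run of the experiment: a single detection position, drawn by
    rejection sampling from the fringe probability."""
    while True:
        x = rng.uniform(x_min, x_max)
        if rng.random() < fringe_probability(x):
            return x

def histogram(positions, bins=16, x_min=-2.0, x_max=2.0):
    """Count detections per bin."""
    counts = [0] * bins
    width = (x_max - x_min) / bins
    for x in positions:
        index = min(int((x - x_min) / width), bins - 1)
        counts[index] += 1
    return counts

rng = random.Random(0)  # fixed seed so that the run is reproducible
print("single run:", detect_one(rng))  # one number, no pattern visible
counts = histogram([detect_one(rng) for _ in range(5000)])
for c in counts:
    print("#" * (c // 20))  # bright and dark fringes emerge
```

A single call to `detect_one` returns just one position; only the histogram over many identically prepared runs shows the alternation of bright and dark fringes.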

The Quantum Zeno Effect, named after the ancient paradox of Zeno, uses the collapse of the wave function to “lock” a system in a certain state by measuring it frequently, despite other effects like radioactivity or counteracting magnetic fields. This means that if you look “frequently enough” at Schrödinger’s cat, it is immortal.
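
The “locking” can be illustrated with the simplest textbook model. The sketch below assumes a two-level system that, left alone, rotates coherently out of its initial state (for example a spin in a magnetic field), interrupted by equally spaced ideal measurements. This model is an assumption made here for illustration, and the function name is hypothetical.

```python
import math

def survival_probability(n_measurements, total_angle=math.pi / 2):
    """Probability to still find a two-level system in its initial state
    after n equally spaced projective measurements.

    Without measurement the state rotates by total_angle, so that the
    survival amplitude is cos(total_angle). With n measurements, each
    short interval contributes a factor cos^2(total_angle / n)."""
    step = total_angle / n_measurements
    return math.cos(step) ** (2 * n_measurements)

for n in (1, 2, 10, 100, 1000):
    print(n, round(survival_probability(n), 4))
```

With a single measurement at the end, the system has left its initial state completely; the more often it is measured in between, the closer the survival probability gets to one.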

Zurek (1991/2003)

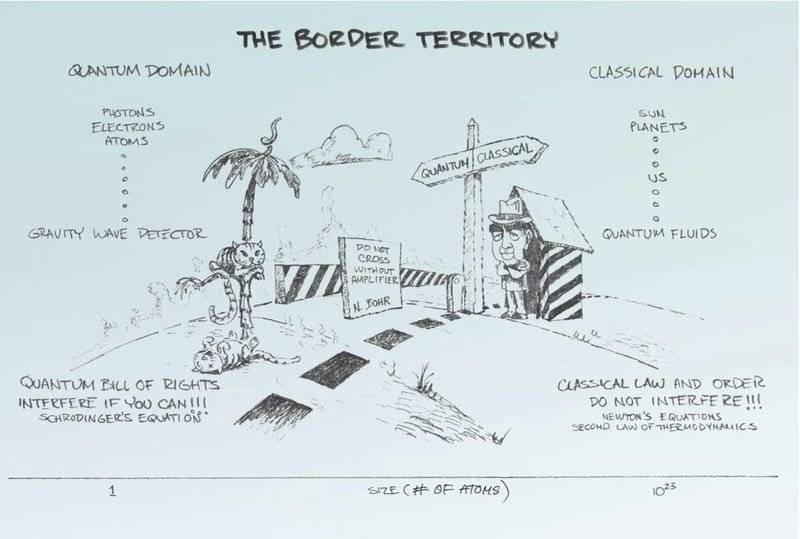

Drawing by W. H. Zurek from the 1991 paper in Physics Today, re-posted by xts on Physics Forums

Zurek compares classical physics to quantum mechanics via experiments done in different domains of physics. An example is given by a gravitational wave detector, which has to cope with the indeterminate force on a mirror arising from the momentum transfer of photons. He also states the need for an amplifier to measure quantum effects because these usually occur on tiny scales.

But this border between the macroscopic and the microscopic is itself undefined (picture above). Often it is the required precision that determines where this line is drawn and suggests the simplifications we make. The “many-worlds interpretation” goes so far as to push it back into the consciousness of the observer, which is notably an “unpleasant location to do physics” (Zurek). A more detailed discussion of Zurek’s paper and the concept of “decoherence” that he promoted can be found elsewhere on this blog.

Copenhagen Interpretation

The Copenhagen Interpretation was discussed with emphasis on three key points:

- Detection: The detection of a quantum state often needs amplification and is irreversible, because it collapses the wave function, thus erasing the information contained in the preceding superposition of states. Born’s rule gives the probability of each outcome as |Ψ|² (in a suitable basis related to the measured observable) or |⟨out|Ψ⟩|² (using the state |out⟩ for the measurement outcome).

- Evolution: The evolution through space and time is given by the wave function Ψ(r,t), a solution to the time-dependent Schrödinger equation.

- Preparation: Ψ(r,0) “encodes” the instructions for preparing the system, which is important because measurements must be repeated to collect statistical data. More precisely, Ψ(r,0) describes a statistical ensemble of identically prepared systems on which many (independent) measurements can be made. With respect to this point, other interpretations depart by assigning the wave function to a single run of an experiment.
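
Born’s rule from the first point can be written out for a small example. This is a minimal sketch for a hypothetical two-state system; the state and the function names are chosen here for illustration.

```python
import math

def normalise(state):
    """Rescale a list of complex amplitudes to unit norm."""
    norm = math.sqrt(sum(abs(a) ** 2 for a in state))
    return [a / norm for a in state]

def inner_product(bra, ket):
    """<bra|ket>, with complex conjugation of the first argument."""
    return sum(b.conjugate() * k for b, k in zip(bra, ket))

def born_probability(outcome_state, psi):
    """Born's rule: probability |<out|psi>|^2 for the given outcome."""
    return abs(inner_product(outcome_state, psi)) ** 2

# Hypothetical two-state example: psi = (|0> + i|1>) / sqrt(2)
psi = normalise([1 + 0j, 0 + 1j])
basis = [[1 + 0j, 0j], [0j, 1 + 0j]]
probabilities = [born_probability(out, psi) for out in basis]
print(probabilities)  # the two values add up to 1
```

Note that the relative phase (the factor i) drops out of these probabilities; it would only show up in a measurement in a different basis.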

What does this tell us about the “reality behind” the quantum-mechanical state?

Schrödinger mentions that “indeterminate” or “fuzzy” states in quantum mechanics should not be seen as an out-of-focus photograph of reality, but rather as an accurate picture of a fog-like phenomenon (i.e., one that does not contain sharp features). The wavy features of quantum phenomena (related to superposition and interference) cannot be verified from a single particle detection, however. The wave pattern we “see”, e.g. in the double-slit experiment, is a continuous reconstruction (or “reduction”) obtained from the statistics of individual data.

Textbook Knowledge

Question: Look through your favorite quantum mechanics textbooks and make a list with answers to the following questions:

- what is the meaning of the “state vector” (or wave function)?

- what is the meaning of “measurement” (i.e., what makes this process different so that the Schrödinger equation does not apply)?

Franz Schwabl,

Quantenmechanik – Eine Einführung, 7th edition 2007 (pp. 13-15, 39, 376, 390, 392)

Particles and systems can be described by a wave function. From the wave function there follows a probability distribution that gives, for example, the probability to be at place x at time t. Since this description is statistical, it follows that quantum mechanics is non-deterministic. The wave function still exhibits causality, since its evolution in time follows a deterministic differential equation, the Schrödinger equation.

The probability distribution contains in general superpositions of pure states, so we have non-macroscopic systems where multiple pure states appear at the same time. When we measure such a system, it can be found in different states. But for an ideal measurement (i.e., the system is not interacting with any environment other than the measurement apparatus), all future measurements (of the same observable) should reproduce the same result; it should not change uncontrollably. This can be seen as the act of measuring changing the wave function: if the system was in a superposition of states before the measurement, it gets reduced to one pure state afterwards.

(Erik T.)
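
The reduction of the wave function described in the Schwabl summary can be mimicked in a few lines. This is a toy sketch, assuming an ideal measurement in the basis in which the state is written; the function `measure` is a hypothetical helper, not taken from the textbook.

```python
import random

def measure(state, rng):
    """Ideal measurement in the basis in which `state` is written.

    Picks outcome k with probability |amplitude_k|^2 and returns the
    outcome together with the reduced (collapsed) state."""
    probabilities = [abs(a) ** 2 for a in state]
    outcome = rng.choices(range(len(state)), weights=probabilities)[0]
    collapsed = [1.0 if k == outcome else 0.0 for k in range(len(state))]
    return outcome, collapsed

rng = random.Random(1)
state = [2 ** -0.5, 2 ** -0.5]  # equal superposition of two states
first, state = measure(state, rng)
repeats = [measure(state, rng)[0] for _ in range(10)]
print(first, repeats)  # every repeated measurement agrees with the first
```

The first outcome is random, but once the state has been reduced, repeating the same measurement reproduces that outcome every time.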

Wolfgang Nolting,

Grundkurs Theoretische Physik 5/1 Quantenmechanik – Grundlagen, 8th edition (pp. 6, 82, 94ff, 134, 189–91):

The state of a system is described by a wave function. It is a solution of the Schrödinger equation, but has no measurable particle property. The wave function has a statistical character and gives, in contrast to classical mechanics, only probabilities. It is not possible to measure the wave function directly, but it determines, for example, the probability density to find a particle at time t at a certain position. The statistical character is responsible for the uncertainty principle and the spreading of wave packets.

For a measurement we need 1) system, 2) apparatus and 3) observer. In contrast to classical physics (and thermodynamics), where the interaction between 1) and 2) is often neglected, in quantum mechanics this interaction cannot be neglected. If physical processes are so small that ℏ cannot be seen as relatively small, we find quantum phenomena in which any measurement disturbs the system massively and changes its state. A certain apparatus measures a certain observable and changes the system in a specific way. Another apparatus, which measures another observable, changes the system in another way. Therefore, the order of measurements (which apparatus is used first and which second) matters. The observables are assigned to operators that do not commute, which is why the order matters.

In principle, it is not possible to measure non-commuting observables, for example momentum and position, exactly and simultaneously. Thus it makes no sense to talk about exact momentum and position. Quantum mechanics can answer the following type of questions: which results are possible? What is the probability that a possible value is measured? The theory only provides a probability distribution. Experimentally, we obtain it by performing many measurements on the same system or by measuring a large number of identically prepared single systems.

(David F.)
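
The statement in the Nolting summary that the order matters for non-commuting operators can be checked by hand or with a few lines of code. The sketch below uses the Pauli matrices of a spin 1/2 as an example; this choice is made here for illustration and does not appear in the summary.

```python
def matmul(a, b):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def commutator(a, b):
    """[a, b] = ab - ba."""
    ab, ba = matmul(a, b), matmul(b, a)
    return [[ab[i][j] - ba[i][j] for j in range(2)] for i in range(2)]

# Pauli matrices for a spin 1/2 (an example chosen here for illustration)
sigma_x = [[0, 1], [1, 0]]
sigma_z = [[1, 0], [0, -1]]

print(commutator(sigma_x, sigma_z))  # non-zero: the two observables do not commute
print(commutator(sigma_z, sigma_z))  # zero: an observable commutes with itself
```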

Pierre Meystre and Murray Sargent III,

Elements of Quantum Optics, Third edition (p. 48):

“According to the postulates of quantum mechanics, the best possible knowledge about a quantum mechanical system is given by its wave function Ψ(r,t). Although Ψ(r,t) itself has no direct physical meaning, it allows us to calculate the expectation values of all observables of interest. This is due to the fact that the quantity Ψ(r,t)*Ψ(r,t) d³r is the probability of finding the system in the volume element d³r. Since the system described by Ψ(r,t) is assumed to exist, its probability of being somewhere has to equal 1.”

(Gino W.)
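
In formulas, the two statements of this quote (normalisation and expectation values) read as follows, where the operator symbol Â for a generic observable is notation assumed here:

```latex
\int |\Psi(\mathbf{r},t)|^2 \, d^3r = 1,
\qquad
\langle \hat{A} \rangle = \int \Psi^*(\mathbf{r},t)\, \hat{A}\, \Psi(\mathbf{r},t)\, d^3r
```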

Walter Greiner,

Quantenmechanik Einführung, 6th revised and extended edition 2005 (pp. 457–58, 464):

Quantum mechanics is an indeterministic theory. This means that there exist physical measurements whose results are not uniquely determined by the state of the observed system before the measurement took place (as far as such a state is observable). In the case of a wave function that is not an eigenstate of the operator corresponding to the observable, it is not possible to predict the exact outcome of the measurement. Only the probabilities of the possible outcomes can be given.

To avoid the problem of indeterminism, it was suggested that particles in the same state only seem to be equal, but actually possess additional properties that determine the outcome of further experiments. Concepts based on this idea have become known as hidden-variable theories. However, Bell proved a theorem which implies that the results of such concepts stand in contradiction to the principles of quantum mechanics.

(Gino W.)
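
The contradiction mentioned in the Greiner summary can be made concrete with one common form of Bell’s inequality, the CHSH inequality. It is not part of the summary and is used here only as an illustration. The sketch assumes the standard quantum prediction for the spin correlation of a singlet pair and one particular choice of measurement angles.

```python
import math

def singlet_correlation(angle_a, angle_b):
    """Quantum prediction for the spin correlation of a singlet pair
    measured along directions at angle_a and angle_b (radians)."""
    return -math.cos(angle_a - angle_b)

def chsh(a, a_prime, b, b_prime, correlation):
    """CHSH combination S; local hidden-variable theories obey |S| <= 2."""
    return (correlation(a, b) - correlation(a, b_prime)
            + correlation(a_prime, b) + correlation(a_prime, b_prime))

s = chsh(0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4, singlet_correlation)
print(abs(s))  # exceeds the local hidden-variable bound of 2
```

For these angles the quantum value is |S| = 2√2, which no local hidden-variable theory can reproduce.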

“Interference Pattern”, Berenice Abbott, taken from blog
